

Install Spark on Windows pip movie

Correct the path of the `u.data` file in the `ml-100k` folder in the script. The script uses the following lines:

- `from pyspark import SparkConf, SparkContext`
- `SConf = SparkConf().setMaster("local").setAppName("RatingsRDDApp")`
- `AlllinesRDD = sContext.textFile("/home/user/bigdata/datasets/ml-100k/u.data")`
- `AllratingsRDD = AlllinesRDD.map(lambda line: line.split())`

It then sorts the results into `SortedResultsRDD` with `collections.OrderedDict(sorted(...))` and loops over them with `for rddKey, rddValue in ...`.

Yay! You read the ratings count for each movie in the MovieLens database using a Python script.
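The ratings-count script described above can be sketched roughly as follows. This is a minimal sketch, not the original script: the helper names (`rating_of`, `count_ratings_locally`, `count_ratings_with_spark`) are hypothetical, and it assumes the rating is the third whitespace-separated column of each `u.data` line and that the counts are produced with `countByValue()`. The pure-Python helper mirrors the Spark logic so the idea can be tried without a Spark installation.

```python
import collections


def rating_of(line):
    # Assumption: u.data columns are user id, item id, rating, timestamp.
    return line.split()[2]


def count_ratings_locally(lines):
    # Pure-Python stand-in for the RDD map + countByValue steps.
    counts = collections.Counter(rating_of(line) for line in lines)
    return collections.OrderedDict(sorted(counts.items()))


def count_ratings_with_spark(path):
    # Imported lazily so the helpers above still work without pyspark installed.
    from pyspark import SparkConf, SparkContext

    conf = SparkConf().setMaster("local").setAppName("RatingsRDDApp")
    context = SparkContext(conf=conf)
    try:
        ratings = context.textFile(path).map(rating_of)
        return collections.OrderedDict(sorted(ratings.countByValue().items()))
    finally:
        context.stop()


if __name__ == "__main__":
    # Three made-up sample lines in the u.data layout, for illustration only.
    sample = [
        "196\t242\t3\t881250949",
        "186\t302\t3\t891717742",
        "22\t377\t1\t878887116",
    ]
    for rating, count in count_ratings_locally(sample).items():
        print(rating, count)
```

To run it against the real data set, call `count_ratings_with_spark` with the corrected path to `u.data`; that call needs both pyspark and a Java runtime.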

Run `spark-shell`; it should start the Scala version of the shell. Yay! You tested the installation by running a word count on the file `README.md`.

When you create a cluster with JupyterHub on Amazon EMR, the default Python 3 kernel for Jupyter is installed along with the PySpark and Spark kernels for Sparkmagic.

Install Spark on Windows pip install

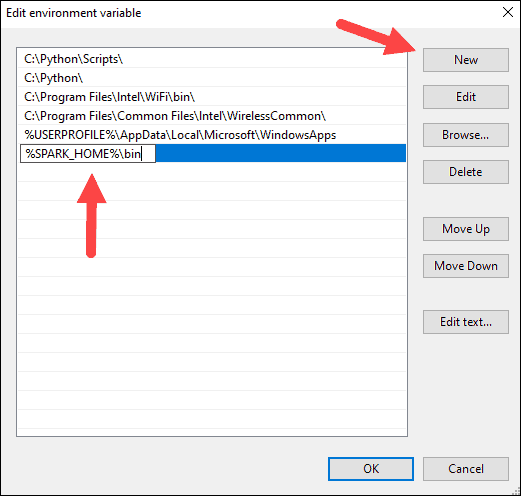

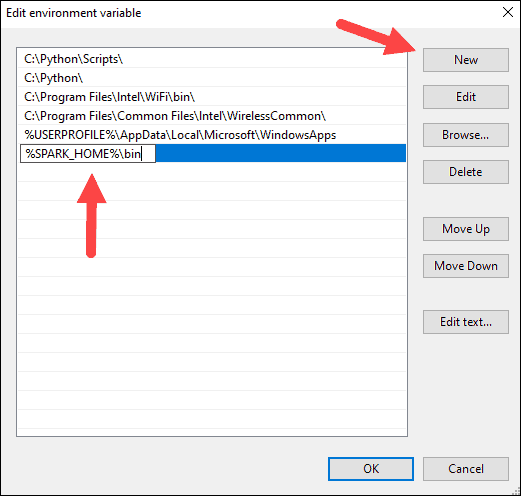

Install Java 8. Before you can start with Spark and Hadoop, make sure you have Java 8 installed, or install it:

- To install JRE 8: `yum install -y java-1.8.0-openjdk`
- To install JDK 8: `yum install -y java-1.8.0-openjdk-devel`
- Set up an alias for the python command by appending it to `~/.bashrc`: `echo "alias python=python36" >> ~/.bashrc` (a single `>` would overwrite the file).
- Download the proper version of Spark: first go to the download page and copy the link address, then fetch it with `wget`.
- Unpack the tarball: `tar xvfz spark-2.3.0-bin-hadoop2.7.tgz`
- Rename `spark-2.3.0-bin-hadoop2.7` to `spark`: `mv spark-2.3.0-bin-hadoop2.7 spark`
- Update the paths in `~/.bashrc`, then reload it: `source ~/.bashrc`

On Windows:

- Select your environment for Windows (32-bit or 64-bit), then download and install the 3.5 version of Canopy.
- Right-click the Windows menu and select Control Panel > System and Security > System > Advanced System Settings > Environment Variables.
- Run `winutils.exe chmod 777 \tmp\hive` from the folder that contains `winutils.exe`.
- Look for `README.md` or `CHANGES.txt` in the Spark folder.
- Type `myRDD = sc.textFile("README.md")` and press Enter.
- If you get a successful count, you have succeeded in installing Spark with Python on Windows.
- Type `quit()` and press Enter to exit Spark.
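The `README.md` smoke test above can also be run as a standalone script rather than typed into the shell. The following is a minimal sketch under stated assumptions: the function names and the application name `ReadmeCount` are hypothetical, and the plain-Python counter is included only as a cross-check that should normally agree with the Spark result.

```python
def count_lines_locally(path):
    # Plain-Python line count, useful as a cross-check for the Spark result.
    with open(path, encoding="utf-8") as handle:
        return sum(1 for _ in handle)


def count_lines_with_spark(path):
    # Imported lazily so the local helper works even if pyspark is missing.
    from pyspark import SparkContext

    context = SparkContext("local", "ReadmeCount")
    try:
        # textFile yields one record per line, so count() is the line count.
        return context.textFile(path).count()
    finally:
        context.stop()


if __name__ == "__main__":
    print(count_lines_locally("README.md"))
```

If `count_lines_with_spark("README.md")` returns a number without raising an error, Spark, Java and Python are wired together correctly.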

We need to verify that the JDK is installed and, if it is not, install it:

- Open the command line (on Windows, search for `cmd` in the Run dialog, Win + R).
- Run the following command: `java -version`. Once it has executed, the output shows the installed Java version.
- If the JDK is not installed, select your environment (Windows x86 or x64) and install the JDK, making sure the installation folder has no spaces in its path name, e.g. `d:\jdk8`.

Then set up Spark:

- Select Spark version 2.3.0 and click the download link.
- Unpack the tar file using WinRAR or 7-Zip and copy the contents of the unpacked folder to a new folder, `D:\Spark`.
- Rename the log4j file in the `conf\` folder to `log4j.properties`.
- Edit the file to change the log level to ERROR for `log4j.rootCategory`.
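The checks and the log4j edit above could be scripted along these lines. This is a hedged sketch, not part of the original instructions: the helper names are hypothetical, and the sample line `log4j.rootCategory=INFO, console` used in the usage note is an assumption about what the properties file contains.

```python
import re
import shutil
import subprocess


def java_version_output():
    # Returns None when no `java` executable is found on PATH.
    if shutil.which("java") is None:
        return None
    # `java -version` writes its report to stderr, not stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return result.stderr.strip()


def path_has_spaces(path):
    # The JDK installation folder should not contain spaces.
    return " " in path


def set_root_log_level(properties_text, level="ERROR"):
    # Replace only the level on the log4j.rootCategory line,
    # keeping whatever appender list follows it.
    pattern = re.compile(r"^(log4j\.rootCategory\s*=\s*)\w+", flags=re.MULTILINE)
    return pattern.sub(lambda match: match.group(1) + level, properties_text)


if __name__ == "__main__":
    print(java_version_output())
    print(path_has_spaces(r"d:\jdk8"))
    print(set_root_log_level("log4j.rootCategory=INFO, console"))
```

Working on the file's text rather than its path keeps the helper easy to try out; to apply it, read `log4j.properties`, pass the contents through `set_root_log_level`, and write the result back.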
